Typically, when you apply for funding, you can’t see what others are submitting or get a sense of how big the competition is. It’s pretty unusual to have an active role in decision-making that you can be excited by and that isn’t a dismal general election.

Q Exchange isn’t just any ordinary grant programme. It’s deliberately designed so that members can do the things they don’t ordinarily get to see and do. It gives members the opportunity to interact and deliberate about the improvement ideas in health and care that they feel strongly about. The concept isn’t necessarily a novel one, but it’s a shift from the traditional world of grant giving. This year, for the second time, we did exactly that, except this time we made it even more accessible, inclusive and member-led, so that there was a sense of responsibility shared among the sizable community. For me, that’s also why it feels important to share what we learnt about how people voted this year in Q Exchange.

Applications to Q Exchange this year

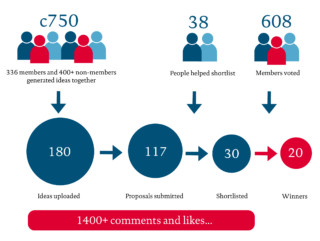

During the application stage, 180 ideas were put forward across the two themes, with nearly 750 people – both Q and non-Q members – involved in projects. Over the course of two months, and with hundreds of comments exchanged between members, 117 of those ideas were converted into formal proposals. Two-thirds of the ideas were in the building improvement capability category, and the remaining third in the outpatients category. Submissions were reviewed by assessor panels made up of members, and shortlisted to 18 building improvement capability projects and 12 outpatients projects. These final 30 shortlisted projects went on to the community vote, and the top two-thirds from each category were selected for funding – 8 from outpatients and 12 from building improvement capability.

How did the community vote?

The community vote was open for two weeks in October. Of the 3,377 eligible members, 608 (18%) took part in voting. Members were asked to vote for their top three projects from each category. What was particularly encouraging to see from the feedback was how members engaged with the voting process in earnest. Members reported that they spent on average between one and two hours reading and deciding which projects to vote for, which is no mean feat considering there were 30 shortlisted projects to choose from.

Currently about 70% of Q members have non-clinical primary roles, with the remaining 30% working as clinicians, and this proportion was broadly reflected among those who voted. It was good to see that 23% of eligible members who are patients, carers or service users voted. Our data also showed that there was slightly better engagement from those who joined Q in the past year, with 22% turnout among this group.

When comparing by country, on average, there was slightly higher representation from England and Wales, and lower representation from Northern Ireland and Scotland. I’d be keen to explore why this was the case and how we can improve this for next year, given we tried to resolve the geographical barriers to voting that we saw in last year’s Q Exchange.

What influenced people’s decision making?

We asked members to fill in an optional survey to help us understand what influenced their decisions and to gather suggestions for how we can improve the process for next year. It was the first time we had opened voting remotely, so it was really important that we captured this feedback.

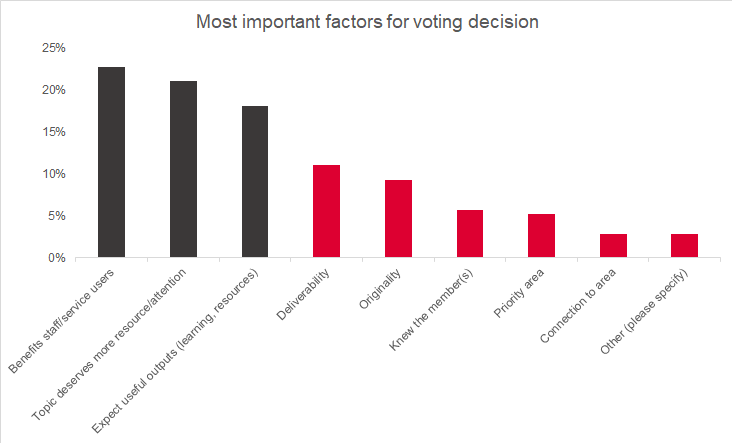

Last year we spoke to PB Network, the advocates for learning and innovation in Participatory Budgeting, to get advice on voting behaviours to help inform the rules for Q Exchange. They suggested that members were more likely to vote for their own project, organisation and location, or for those they already knew. For this reason, we asked members to vote for six projects (three from each category) to reduce bias. We were surprised to see that the top three factors in voting weren’t necessarily who members knew or where they’re based, suggesting that it isn’t a popularity contest. The factors that were most important to members were the type of project and its intended impact (highlighted by its potential to benefit staff or service users), whether the topic deserved more attention and resource, and which outputs were likely to be most useful.

The winning projects

The 20 winning projects are spread across the UK, and the impact of many of these projects will be felt UK-wide. Looking at the projects that were successful, it seems that those able to demonstrate benefits to the community and the wider system fared well. The winning ideas also show the potential for cross-boundary working, and cover a variety of settings, including acute care, ambulance care, primary care networks and even prisons. This is useful learning for how members communicate and structure their applications in future rounds.

Q Exchange demonstrates the potential to do something that is truly open, supportive and democratic at a large scale. We know from 2018 that there are benefits to running a collaborative funding programme like this, as it allows all participants to gain something throughout the process. Last year, several teams whose ideas didn’t receive funding went on to build and deliver their projects by making the most of the connections, feedback and learning they gathered from Q Exchange. In fact, they’ve been sharing their stories through the feedback webinars we’ve been hosting over the past month.

I’m really excited to work with the 20 winning teams to see how their projects unfold, but I’m also excited to learn what other teams will be doing, and how they will develop their ideas over the coming months. Congratulations, and thank you to everyone who took part in Q Exchange this year!