In November 2020, the Q team delivered the annual Q community event in a virtual format. Joined by over 600 members from across the UK and Ireland, we came away from the two-day event energised and excited. But just as running virtual events at this scale was new to us in 2020, so was evaluating them. I know that many community members have faced the challenge of creating engaging online events and virtual spaces for collaboration over the last year, so I wanted to share some insight we gained through this process.

Here are six things we learned from our experience of evaluating the success and impact of an online event.

Beware of data overload

When we’re online, we produce more data than when we attend physical events. From Zoom polls to chat sessions, every activity is a data collection opportunity – plus we also gathered all-important in-depth qualitative feedback from a smaller number of attendees. This should be a blessing but can also be a curse if you aren’t able to prioritise what’s important. We realised early on that we didn’t have time to sift through every data point. Clear evaluation questions helped us find a way through the vast amounts of data, and to prioritise where to put our energy. If you’re evaluating your own event, start by identifying your key questions, and then link each one to the data that can answer it – otherwise you risk drowning in data.

The rules of engagement are different online

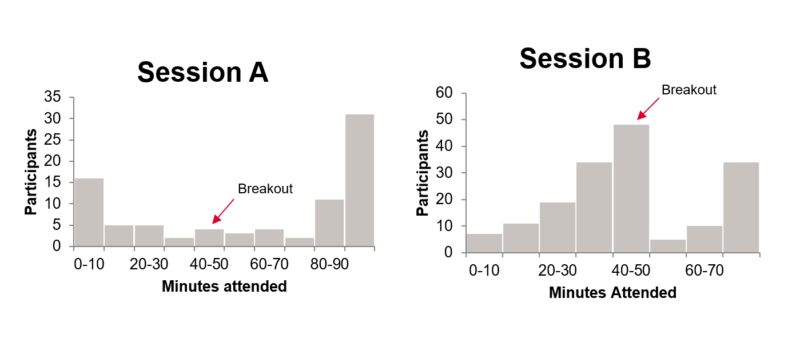

We learned a lot more about engagement behaviour than we would for in-person events. For example, we encouraged busy delegates to “dip in and out” as required – and attendance patterns showed that delegates did so, particularly at the end of a segment. It was clear that the online platform made it easier for people to engage in this way, and we learned some key lessons from this data – including that Zoom’s ‘breakout’ function doesn’t suit everyone. Notably, this effect was not obvious in all sessions; the patterns below show that whereas in one session numbers were stable until the end of the session, in the other there was a disengagement spike between 40 and 50 minutes. Establishing early on what type of engagement you’re hoping for allows you to create a permission structure beforehand and build it into your event messaging.

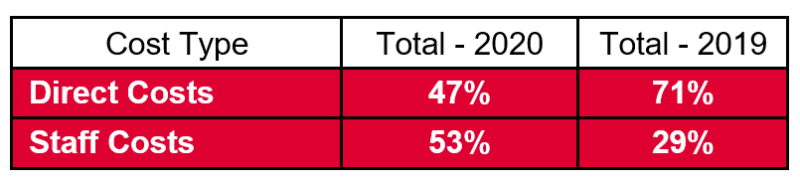

Time is a cost

Considering venue, catering, travel, and other expenses, it would be easy to assume that in-person events are far more resource-intensive to run. However, our evaluation in 2020 showed that staff time is an easily overlooked cost during online event planning, and for this event it made up over half of all costs incurred. This information enabled us to start having conversations about what appropriate staff resourcing looks like, and to fully recognise that the impact was substantial; without collecting data for this, we would have been left guessing. We recommend keeping track of this, because staff time may be your greatest resource – and we found that the final numbers can be surprising.

Surveys are not enough

The first thought for evaluation is often to just do a survey. Surveys are a useful tool after your event finishes, but it’s during the event that you have the largest captive audience. With this in mind, we integrated polls and other data collection during the event, making us less reliant on people answering a survey afterwards. We took five minutes at the end of every session to poll attendees for their feedback using standardised questions for comparison. Building in this time can also allow for a short ‘after-action review’, where delegates can say what worked well, and what could have been even better, using the chat. Looking back, we could have used this time better and emphasised this element more as a key mechanism for improving quality.

Impact is hard to demonstrate

You can do all the above and more, and it still may not be realistic to demonstrate impact by evaluating a one-off event. In many cases it will be better to consider impact in the context of a broader evaluation. For us, that means thinking about the role our community event plays in achieving impact through the Q community. You can also consider your Theory of Change and ground yourself in what the evidence tells us about the kind of change these types of events can create, in order to set achievable expectations.

Sharing, Comparing, Learning

Finally, you need a reference point; without one it can be hard to say anything very meaningful about the success of your event, even where you have lots of data. Refer back to any precedents you already have; online if possible, but in-person comparisons are still useful. For example, how does your delegate feedback compare to in-person events? How do the costs compare? We compared costs across years, and used similar survey questions to previous events; it really helped give a grounding of ‘what good looks like’. We plan to use some of the same measures in future years to continue comparing.

What next?

Ultimately, it’s good to acknowledge that evaluating events that would usually be held in person is a new challenge for many of us. We are still figuring out how people engage online at a time when face-to-face events are not possible, and how our design can support that. But by sharing and comparing our learning we can grow a body of knowledge to help us all in navigating the relatively new space of online-only events.

As we move through 2021, we’ll develop how we design events based on what we’ve learned and will continue to share our learning as we go. We’d also love to hear from you on what you’re doing to evaluate your online events. What’s worked and what hasn’t, and what have the challenges been? Share your thoughts in the comments below.

Comments

sharon wiener-ogilvie 22 Feb 2021

Hi Henry, thanks so much for sharing your learning from the evaluation of the Q event - really interesting points for us to take into our work, and which I think are also relevant for running online breakthrough collaboratives. Thanks for taking the time to share learning.

marie innes 22 Feb 2021

Very helpful pointers - thanks for sharing

Henry Cann 23 Feb 2021

Thanks both. Sharon, do let us know how the collaboratives go!

Kuldip Nijjar 22 Feb 2021

Thank you for sharing Henry. It's very interesting seeing the engagement comparisons, and you are correct in your observations around the amount of data we could access, but we should ask ourselves: do we really need it?

Henry Cann 23 Feb 2021

Thank you Kuldip. I agree that the amount of data is a potential 'trap' to get lost in. I think it also raises questions about ethics and proportionality - because you should only ever collect what you can really make use of.

Kate Phillips 23 Feb 2021

Super helpful- thank you for sharing. I hadn't considered comparing the feedback to a face-to-face event. I'm going to see what the differences are.

I also like your points about using the polls and chat box for feedback. I wonder though how anonymity affects feedback? Did you get the impression that this hampered the responses? We've been dropping a SurveyMonkey link into the chat box 5 minutes before the end of online events; we never get 100% but often 70%+ responding. It's been great to have a look through the ideas for improvement etc. straight after the session with the facilitators. Very helpful for iterative improvements during a course.

Henry Cann 23 Feb 2021

Thanks so much Kate, I'm glad you found this useful.

I think you're right about anonymity - I think that chat feedback has an important place as a constructive feedback opportunity - for suggesting improvements but without criticising too heavily; for anything more substantial I think the anonymous survey is more appropriate. That's why a productive focus on 'what worked well', and 'what could be even better' can be a good framing.

Polls, particularly quant scores, do need to be caveated - after all, who is left at the very end of your session? It's likely to be those people who have had a positive experience, and so the feedback could again be tilted.

70% is great, by the way!

Ruth Reid 26 Feb 2021

Thanks for sharing Henry! Really interesting to see the effect of breakouts, which explains a few issues we have had. Which platform did you use for the event? We're thinking of planning something similar on a much smaller scale and trying to decide what would be most effective (and affordable!).

Evelyn Prodger 13 Mar 2021

Really interesting read

Thanasis Spyriadis 30 Sep 2022

Hi Henry,

Thanks a lot for sharing these reflections. They are very useful indeed.

Although in-person events are becoming popular again, we continue organising some virtual events mostly to broaden our geographic reach, etc.

My main challenge at the moment, in addition to the points that you raised, is how can we make the evaluation more creative and engaging for attendees. Can virtual event evaluation be more fun and entertaining, while producing useful data of course? Do you happen to have any experience on this?

Thanks in advance for your time.

Best wishes,

Thanasis

Henry Cann 3 Oct 2022

Thanks so much for leaving a comment Thanasis! It's timely since we have our 2022 event coming up in a few weeks! I'd be happy to connect directly on this.

My quick thoughts: I think it's definitely true that evaluation now has more potential to be engaging, and there are new technologies to make the most of this. That doesn't change the need for the evaluation design to take account of known online behaviour patterns, e.g. earlier drop-off being easier than at in-person events due to reduced social desirability bias.

The things I'm focusing on at the moment are:

I do nonetheless think the likes of Sli.do and Kahoot are giving new options for engaging participants and making evaluation feel like less of a chore. There's also great potential for gamification, e.g. with Gather.town, and then visual whiteboards such as we use frequently in Q, i.e. Miro (and then Jamboards).

Being honest, I still haven't cracked the issue of surveys and how to ease the burden on participants. Sli.do is more creative but doesn't entirely solve the problem - let me know if you have any brainwaves!

Happy to chat further,

Henry

Thanasis Spyriadis 4 Oct 2022

Hi Henry,

Thanks for your reply.

I would be interested to learn more about the upcoming event that you mentioned. Admittedly, my evaluation expertise is not in the health sector, but mostly in the areas of arts and culture, Higher Education, as well as tourism destination development. That said, I am keen to explore any information that may be interesting, as there are clearly common challenges and opportunities in the evaluation work that we do across various contexts. I even considered joining the Q Evaluation SIG, but I think that is more directed towards health sector professionals.

I like your point about making evaluation an extension of the conversation / session rather than an add-on to an event. I guess one challenge in this is making the value that evaluation adds to the online event more relevant or applicable to the audience, in a timely manner.

Thanks for suggesting some options for gamification and more fun audience engagement. I will play around with them a bit to see if they can help me.

Best wishes,

Thanasis