UK Evaluation Society Council member and Q Evaluation SIG convenor Emma Gibbard initiated a day-long training course recently, led by Kate McKegg, the co-author of the book Developmental Evaluation Exemplars and co-founder of the Developmental Evaluation Institute. Participants came from the Q community, UK Evaluation Society and the Health Foundation.

In this two-part recap I’ll do my best to convey this complex and multi-faceted new approach, which represents a major paradigm shift in evaluation – and share some highlights from what proved to be an inspiring and engaging workshop.

What is Developmental Evaluation?

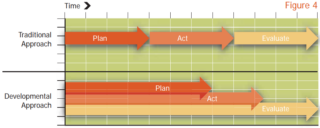

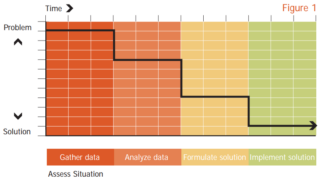

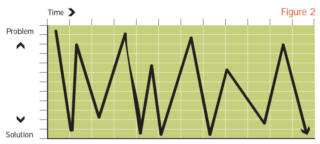

Developmental Evaluation (DE) represents quite a shift from the conventional linear and sequential approaches to evaluation, which are best suited to simple – often technical – types of problems. It’s not meant to replace the dominant approaches – formative and summative evaluations – but to fill a gap where social innovators are bringing about system change under conditions of complexity.

DE principles were used by RAND Europe in the evaluation of the Q community itself.

We can’t solve ‘wicked’ problems with a programme

– Kate McKegg

The developer of the DE approach, Michael Quinn Patton, helps us understand which evaluation approach to use in different situations: “Is the subject of the situation being crafted (developmental), refined (formative) or judged worthy of sustaining or scaling up (summative)?”

Patton offers this further definition of DE: “An approach to evaluation grounded in systems thinking and that supports innovation by collecting and analysing real-time data in ways that lead to informed and ongoing decision-making as part of the design, development and implementation process” – though it’s worth noting that DE can still help in formative and summative phases.

So DE is for complex situations where the usual linear approaches don’t help. As DE trainer Kate McKegg explained at the outset, we can’t solve ‘wicked’ problems with a programme.

DE is a tricky paradigm shift for everybody. You need to be prepared to have tough and courageous conversations

– Kate McKegg

DE is real-time and helps people make day-to-day decisions, testing their latest hunches against the data and suggesting which new experiments to try and which directions to abandon. It records opportunities, tensions and unintended consequences, and makes decision-making more transparent.

How does it work in practice?

The DE evaluator is embedded in the project team, rather than sitting independently outside it. “So often, evaluators fly in and fly out, and the final report may be perceived by those in the community or project as inaccurate, and a misrepresentation of the value of what is being done on the ground,” Kate McKegg explained.

If nothing is developed, it has failed

– Jamie Gamble, A Developmental Evaluation Primer

A lot of evaluation reports aren’t all that evaluative: the reasoning from data and evidence to a judgement about value is often not all that rigorous or transparent.

“DE is a tricky paradigm shift for everybody. You need to be prepared to have tough and courageous conversations with everybody in the initiative. It’s not for the faint-hearted,” said Kate.

Traditional evaluation reporting can struggle to present negative findings in a way that is acceptable to clients. The real-time, embedded approach of DE can make ‘speaking truth to power’ more effective and more likely.

Kate advised us that a DE plan and design need to be as emergent as the initiative or strategy being evaluated, and that traditional planning and contracting processes often aren’t set up to cope with the iterative nature of a DE. Whilst some commissioners of evaluation find the thought of a DE attractive, they can become uncomfortable with the iterative nature of DE design and implementation – it’s not what they are used to – and some revert to commissioning more conventional types of evaluation.

It’s not all that common for commissioners and funders to be true partners with communities in an evaluation process… Moving to this model of co-production is a big shift that can feel scary and risky

‘Nothing about us, without us’

Another key element in DE is co-production – an equal partnership with those taking part in the evaluation, such as patients. ‘Nothing about us, without us’ is at the heart of Kate’s DE approach.

“It’s not all that common for commissioners and funders to be true partners with communities in an evaluation process – for decision making on design and implementation, analysis and reporting to be shared. Moving to this model of co-production is a big shift for many, and stepping into this way of working can feel scary and risky when you’ve never done it before.”

Kate’s approach really resonated with participants. Q’s Evaluation and Insight Manager Natalie Creary commented: “I have a warm and fuzzy feeling inside – I think I’ve found my calling. I will definitely use it at every opportunity moving forward. DE is an exciting approach to evaluation, it helps you to sense-check what you are doing as you go along and embeds an evaluation and learning culture from the outset. I like that it involves all stakeholders, so everybody owns it.”

And Evaluation SIG convenor Emma Gibbard added: “The eight key principles reflect many of the top tips we see today when guiding others to plan their evaluations. It fits well with QI, utilising similar tools, embedding co-production as well as supporting the ongoing learning and development of the intervention through the use of real-time feedback.”

“One of the things Kate said that really resonated with me was that Developmental Evaluation should inspire as well as provide direction in the face of complexity. I certainly left feeling creative and inspired and felt that it provided another useful tool for the evaluation toolkit!”

A Health Foundation colleague said that they hope to grow a cohort of evaluators who can practise DE, but also recognised that “people go into evaluation because they want to observe, not lead – so it’s a skill shift.”

**Next up, DE tools and its approach to scaling up: read part 2 of the Developmental Evaluation training workshop report**